Deepfake technology uses artificial intelligence, specifically deep learning, to generate or manipulate video, audio, or images so that someone appears to have said or done something they didn’t. It typically works by training a model on a large dataset of real content, such as photos, video footage, or voice recordings of a person, until the model can produce new content that resembles the original data without copying it. The result looks authentic but is entirely artificial.
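To make the train-then-generate idea above concrete, here is a deliberately tiny Python sketch. It assumes each piece of content has already been reduced to a short list of numbers (a hypothetical stand-in for image or audio features). It "trains" by learning the mean and spread of each number across the real examples, then samples new lists that resemble the data without copying any single example. Real deepfake systems use deep neural networks such as autoencoders or generative adversarial networks rather than this simple per-feature Gaussian model, so treat this only as an illustration of the principle.

```python
import random
import statistics

def fit(dataset):
    """'Train' a toy model: learn the mean and standard deviation of each feature."""
    columns = list(zip(*dataset))
    return [(statistics.mean(col), statistics.stdev(col)) for col in columns]

def generate(params, n, seed=0):
    """Sample n new feature vectors from the learned per-feature Gaussians."""
    rng = random.Random(seed)
    return [[rng.gauss(mu, sigma) for mu, sigma in params] for _ in range(n)]

# Hypothetical "real" data: each row stands in for features extracted from one image.
real = [
    [0.9, 2.1, 3.0],
    [1.1, 1.9, 3.2],
    [1.0, 2.0, 2.9],
    [0.95, 2.05, 3.1],
]

params = fit(real)            # learn what the real data looks like
fake = generate(params, n=3)  # produce new, similar but not identical rows

for row in fake:
    print([round(x, 2) for x in row])
```

With a fixed seed the same synthetic rows are produced on every run; each row lies close to the real data but matches none of the training examples exactly.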

It is difficult to say which deepfake video has fooled the most people: many deepfakes go undetected or are never publicly identified, and even for well-known examples there is no reliable way to count how many viewers believed them. Widely publicized deepfakes include a 2018 video in which Barack Obama appears to deliver remarks actually voiced by the comedian and filmmaker Jordan Peele, and face-swapped videos of the actress Gal Gadot, but it is unknown how many people were actually fooled by them.

Deepfakes can be dangerous. They can be used to spread misinformation and propaganda, manipulate public opinion, and damage reputations, as well as for more targeted abuses such as fabricating evidence in legal cases or attributing false statements to candidates during political campaigns. More broadly, they can erode trust in media and information, making it harder for people to tell what is real from what is fake. Individuals, organizations, and governments need to be aware of these dangers and take steps to counter the malicious use of deepfakes.

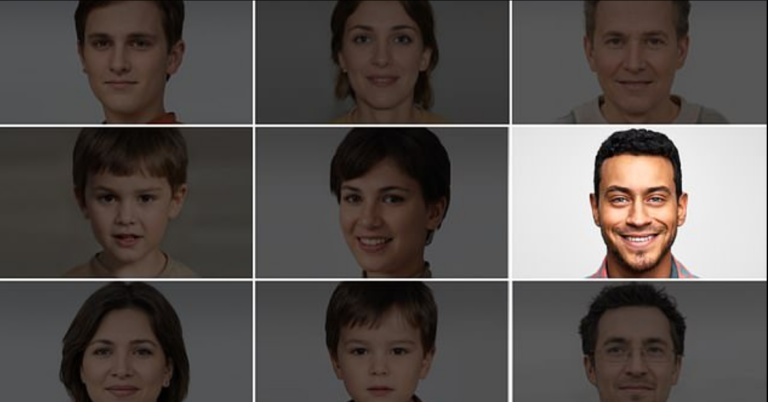

Spot the real image

People create deepfakes for a variety of reasons, some of which include:

Entertainment: Some people create deepfakes for fun, for example comedic parodies or face-swapped movie scenes.

Political propaganda: Deepfakes can be used to spread false information or manipulate public opinion for political purposes.

Malicious intent: Deepfakes can be used to defame or harass individuals, or to deliberately spread false information about them.

Research: Some researchers create deepfakes to explore and advance the technology, to study its potential impact, or to build datasets for training deepfake detectors.

Personal gain: Deepfakes can also be used for financial fraud or to impersonate someone else for profit.

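It’s important to note that creating deepfakes for malicious purposes is unethical and, depending on the jurisdiction, may be illegal, for example under laws covering fraud, defamation, or non-consensual intimate imagery.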

Deepfakes can be made in several media: they can manipulate or generate video and still images, and they can synthesize fake speech in audio. Common formats include:

Video: The most familiar form, created by swapping one person’s face onto another’s body, altering lip movements to match new audio, or generating footage entirely from scratch.

Audio: Voice cloning, in which a model trained on recordings of a person’s speech generates new speech in that voice, or manipulation of existing recordings.

Images: Either entirely synthetic images, such as photorealistic faces of people who do not exist, or edits to existing photos that create fake content.

In practice, the medium chosen depends on the desired outcome and on the tools and algorithms available to the creator.